Numbers are often used to express a concept: for example, “seven times more” or “seventy-seven times” usually just mean “a lot”. They make up a unique and interesting feature to be uncovered. Every word has its meaning in a specific context, and since the Bible comes from oral tradition there are a lot of recurrent patterns. I’ll leave it to you to analyse the differences in the Gospels’ word clouds, and to appreciate the difference in tone between the Gospels, Genesis and the Apocalypse (the last book of the Bible, which may have been written 100 years after Christ).

Fig. 5: Comparison of word clouds among the first 3 Old Testament books.

The input file is read as a single string. Old Testament books are listed in `old_books`, and the `multinames` list denotes all the books that have multiple versions (e.g. The First Book of Kings, The First Book of Paralipomenon, etc.). After this step, each book starts with the pattern `Book_name Chapter 1`, followed by verse numbers and text. Fig. 1 shows how I subdivided all the books into single files.

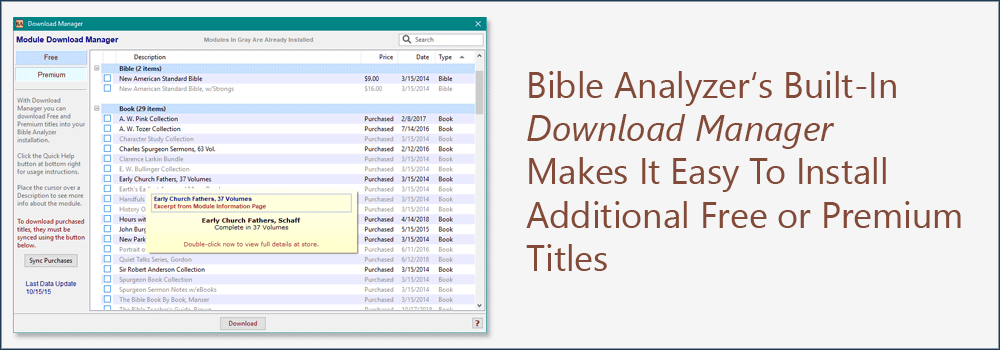
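
The splitting step can be sketched roughly as follows. This is a minimal illustration, not the article's original code: the function name `split_books`, the sample text and its verse format are made up for the example, and it only assumes that each book begins with a line of the form `Book_name Chapter 1`.

```python
import re

def split_books(raw_text, book_names):
    """Split one big string into {book_name: text} using 'Book_name Chapter 1' markers."""
    # Try longer titles first, so a long title wins over a shorter one it starts with.
    names = sorted(book_names, key=len, reverse=True)
    alternation = "|".join(re.escape(name) for name in names)
    # A book starts where a line begins with '<name> Chapter 1' (and not 'Chapter 10', etc.).
    pattern = re.compile(r"^(%s) Chapter 1\b" % alternation, re.MULTILINE)
    matches = list(pattern.finditer(raw_text))
    books = {}
    for i, match in enumerate(matches):
        # Each book runs until the start of the next book, or the end of the text.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(raw_text)
        books[match.group(1)] = raw_text[match.start():end].strip()
    return books

# Made-up sample text, only to show the expected layout.
sample = (
    "Genesis Chapter 1\n1:1. placeholder verse\n"
    "Genesis Chapter 2\n2:1. placeholder verse\n"
    "Exodus Chapter 1\n1:1. placeholder verse\n"
)
books = split_books(sample, ["Genesis", "Exodus"])
```

Each value in `books` could then be written to its own file, one file per book.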

#Module creation for bible analyzer manual

Preprocessing is the very first thing we should always keep in mind! Here the raw input file is cleaned and the books are subdivided into single files. I performed a simple manual cleaning, removing the general introduction, the title and introduction of each book, and the Project Gutenberg end disclaimers.
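
The cleaning described here was done by hand. As a possible automated alternative (not the method used in this article), the Gutenberg license text could be trimmed with a small helper like the one below. It assumes the file contains the usual `*** START OF ... PROJECT GUTENBERG EBOOK ...` and `*** END OF ... PROJECT GUTENBERG EBOOK ...` marker lines. The function name is hypothetical, and the exact marker wording should be checked against the downloaded file.

```python
import re

# Assumed marker lines; verify them against the actual Gutenberg file.
START_RE = re.compile(
    r"^\*\*\* ?START OF (?:THE|THIS) PROJECT GUTENBERG EBOOK.*$", re.MULTILINE
)
END_RE = re.compile(
    r"^\*\*\* ?END OF (?:THE|THIS) PROJECT GUTENBERG EBOOK.*$", re.MULTILINE
)

def strip_gutenberg_boilerplate(raw_text):
    """Return the text between the START and END marker lines, if they are present."""
    start = START_RE.search(raw_text)
    end = END_RE.search(raw_text)
    begin = start.end() if start else 0             # keep everything if no START marker
    finish = end.start() if end else len(raw_text)  # keep everything if no END marker
    return raw_text[begin:finish].strip()

# Toy input that mimics the layout of a Gutenberg text file.
raw = (
    "License header\n"
    "*** START OF THE PROJECT GUTENBERG EBOOK THE BIBLE ***\n"
    "Genesis Chapter 1\nplaceholder verse\n"
    "*** END OF THE PROJECT GUTENBERG EBOOK THE BIBLE ***\n"
    "License footer\n"
)
cleaned = strip_gutenberg_boilerplate(raw)
```

The introductions and titles of the individual books would still need separate handling, since they do not have a fixed marker.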

#Module creation for bible analyzer install

Since Easter is approaching, I thought of a nice and fun data science project: applying NLP techniques to the Bible. The Bible can be freely downloaded in text format from Project Gutenberg (Gutenberg License).

The Douay-Rheims Bible is the English translation of the Latin Vulgate and counts a total of 74 books. The first part of the Bible is the Old Testament, made of 46 books, which tells the history of the Israelites (Pentateuch), the division between good and bad (Wisdom books), and the books of the prophets. The second part is the New Testament, 28 books that tell the history of Christ and his disciples and the future of Earth up to the Apocalypse. The main books in this part are the 4 Gospels by Mark, Matthew, Luke and John, and the Apocalypse (or Revelation).

This project is meant as a gentle introduction to the NLP world, taking the reader through each step, from cleaning raw input data to extracting and understanding the books’ salient features. In this first part we’ll tackle data cleaning, data extraction from the corpus, and Zipf’s law.

These are all the packages that we will be using throughout the two articles, so you can install them ahead of time and import everything just once:

    import re
    import nltk
    nltk.download('stopwords')
    from nltk.corpus import stopwords
    import glob
    import string
    import gensim
    from gensim.corpora import Dictionary
    from gensim.utils import simple_preprocess
    from gensim.models import CoherenceModel, Phrases, LdaModel
    from gensim.models.ldamulticore import LdaMulticore
    import pandas as pd
    from num2words import num2words
    import numpy as np
    from wordcloud import WordCloud
    from sklearn.feature_extraction.text import CountVectorizer
    import seaborn as sbn
    import matplotlib.pyplot as plt
    from scipy.stats import hmean
    from scipy.stats import norm
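
Zipf’s law says, roughly, that a word’s frequency is inversely proportional to its rank in the frequency table, so `rank * frequency` should stay of the same order of magnitude across the most common words. A minimal sketch of how a rank-frequency table could be built with the standard library only is shown below. The function name `rank_frequency`, the simple tokenizer and the toy sentence are assumptions made for illustration, not the article’s code.

```python
import re
from collections import Counter

def rank_frequency(text):
    """Return a list of (rank, word, frequency), most frequent word first."""
    # Very simple tokenizer: lowercase runs of letters and apostrophes.
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    return [
        (rank, word, freq)
        for rank, (word, freq) in enumerate(counts.most_common(), start=1)
    ]

# Toy text, only to show the shape of the output.
table = rank_frequency("the the the the and and lord")
for rank, word, freq in table:
    # Under Zipf's law, rank * freq is roughly constant for frequent words.
    print(rank, word, freq, rank * freq)
```

On a real book, plotting frequency against rank on log-log axes should give an approximately straight line if the text follows Zipf’s law.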
